Introduction

Milan Spiridonov, 15th October 2024

I'll be using this blog to share my progress on the personal challenge I picked for the AI For Society minor.

This semester, we are asked to work on two projects:

- A group project for a third party. I won't be writing much about this one.

- A personal challenge, which should serve as a playground for us to extend our knowledge, find challenges individually and learn technical skills by tackling them. This is the project I will be covering in this blog.

Since we're free to choose our own project, we're expected to write a proposal that clearly states the project's goals, why we've chosen it and what we expect to learn from it.

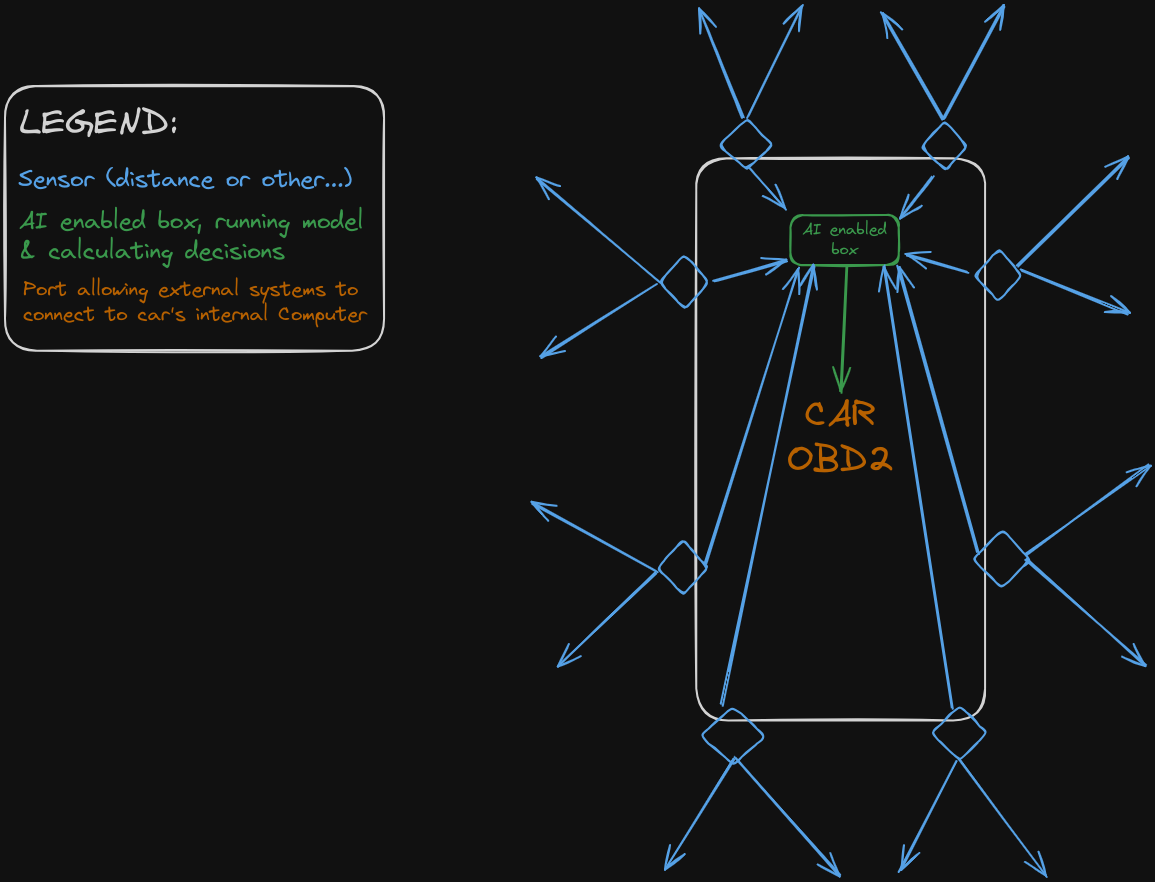

Since I'm your average guy, I immediately concluded that I wanted to work on something car-related. My first idea was an AI that turns a normal car into a fully autonomous, self-driving one. After some thought, I wisely decided against this and pivoted to a more realistic idea: an AI driving assistant. In an ideal world where I've physically built it, this would be a small device, connected to a bunch of sensors and the car's OBD2 port, that runs the AI model on board, makes decisions based on the sensor data and sends those decisions to the car's computer.

After discussing my ideas with two of the Fontys consultants, Coen Crombach (Technical) & Hans Konings (Contextual), I received their approval to start working on my Project Proposal. In it, I described the ins and outs of the project in more detail (as far as I could predict them at that stage).

Overview of Project

Up until now I've only given you a rough idea of what the project might look like, but I haven't gone into much detail. Now might be a good time for that :)

As I said, my ideal scenario is a small machine, running an AI model locally, that reads sensor data & transmits data (inputs) to the car's computer.

The sensors should roughly emulate human perception, and maybe even go beyond it, since we can calculate all kinds of values (e.g. exact distances to nearby objects) that tell us far more about a given situation than the human eye ever could.

By continually collecting sensor data (both for live use and for training), we can feed the model new inputs quickly and often, which means we also get outputs back at a fast, constant rate. So even if the AI never becomes fully autonomous, a system that constantly watches the road alongside us has one big upside: quick interventions. If it knows when to slam the brakes (imagine an unexpected obstacle appearing on the road), the AI could, if all goes well, react far faster than virtually any human.
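To put "react faster" into numbers: the distance a car covers before braking even starts is simply speed × delay. The sketch below compares two delays, and both are illustrative assumptions rather than measurements from this project: a human reaction time of 1.0 s, and an AI delay of one 60 fps frame plus an assumed 10 ms for inference and messaging.

```python
def distance_during_delay(speed_kmh: float, delay_s: float) -> float:
    """Metres travelled at constant speed before braking begins."""
    return speed_kmh / 3.6 * delay_s  # km/h -> m/s, then multiply by the delay


# Illustrative ASSUMPTIONS, not measured values:
HUMAN_DELAY_S = 1.0            # assumed human reaction time
AI_DELAY_S = 1 / 60 + 0.010    # one 60 fps frame + assumed 10 ms inference/messaging

for speed_kmh in (30, 50, 100):
    human_m = distance_during_delay(speed_kmh, HUMAN_DELAY_S)
    ai_m = distance_during_delay(speed_kmh, AI_DELAY_S)
    print(f"{speed_kmh:>3} km/h: human {human_m:5.2f} m, AI {ai_m:4.2f} m")
```

At 50 km/h this works out to roughly 13.9 m versus 0.37 m. The real gap depends entirely on the model's actual latency, and of course on whether its decision is the right one.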

Although over the years I've grown quite contemptuous of Microsoft, I am tempted to name this project 'Car Copilot™️' (if for no other reason than to spitefully slap a trademark on the name).

So… how real is this?

Having said all of that, I must mention that I have no car myself. And since none of my friends expressed a desire to volunteer their cars as a tribute to my silly little school project, the car we'll be controlling will be as real as that NFT I lost money on 2 years ago.

So I decided to create a simulated environment that emulates the main (user's) car, a road, other cars driving on it in different ways, etc…

For this environment to be viable for my project, I have to make sure it allows for the following:

- Have a controllable car that behaves in a (somewhat) physically realistic way, reacting to inputs (gas/brake pedals, steering amount & direction, etc…).

- The script managing the inputs should also expose an interface for external influences (i.e. the AI model), so that I can simulate the AI model actually intervening while a driver is behind the wheel.

- Have roads with specific markings, etc…

- Have other (NPC-like) cars that drive at different speeds & have specific behaviors, to allow for simulating various scenarios.

- Simulate sensors, calculations & data collection.

- Have a nice (or at least usable) UI that displays different values (driver/AI inputs, car state, etc…).

Creating the simulated environment

As I typically do, I dove into creating the environment with a lot of enthusiasm, deciding to build it in pygame, where I'd write my own physics engine (to have better control over the realism, of course!) as well as everything else the environment might need.

After a week of messing around, I realized how much time I was wasting, especially since I'm not very familiar with either pygame or Python itself. So I finally gave in and switched to the Unity game engine, where I started making progress almost immediately.

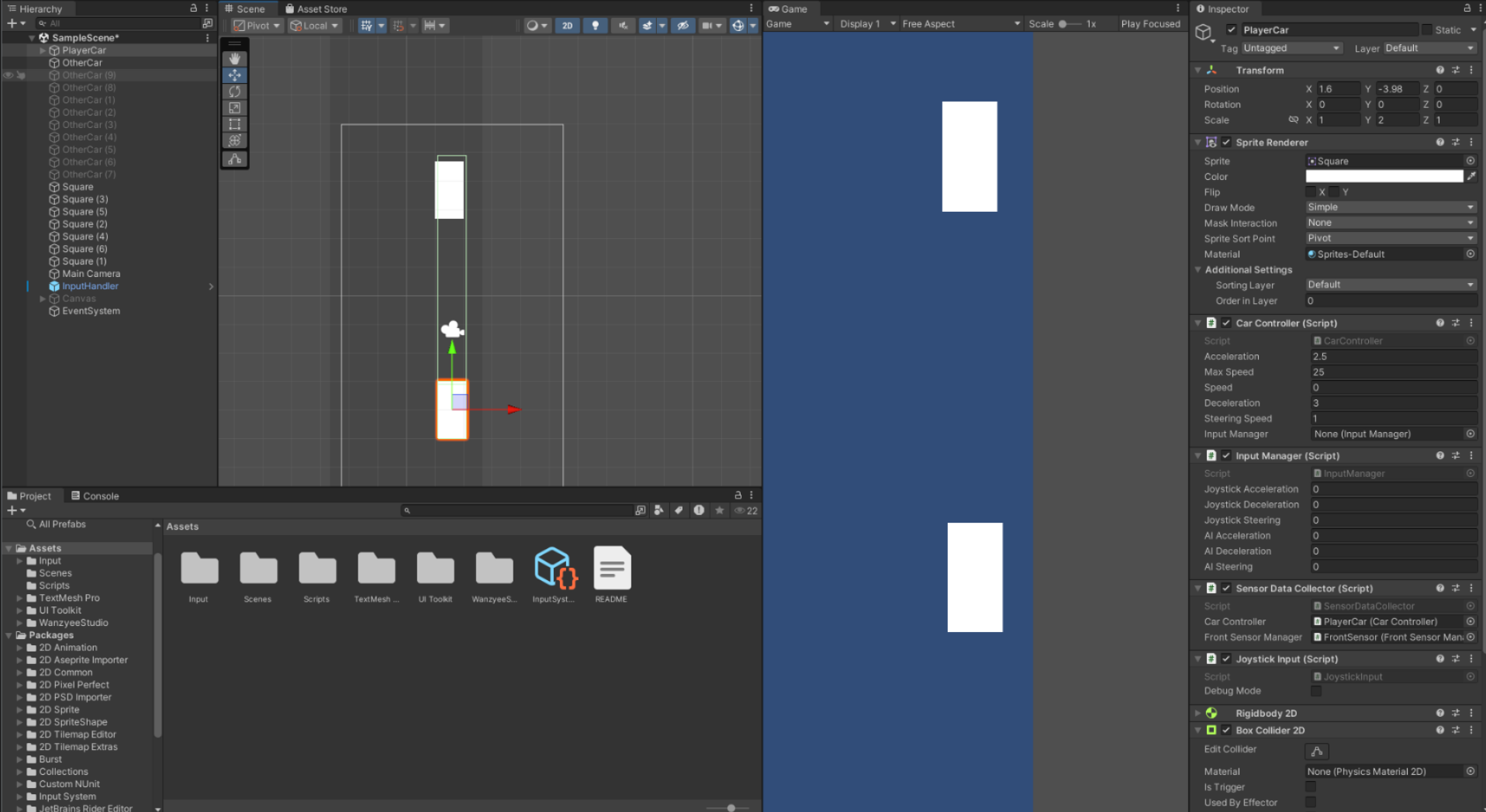

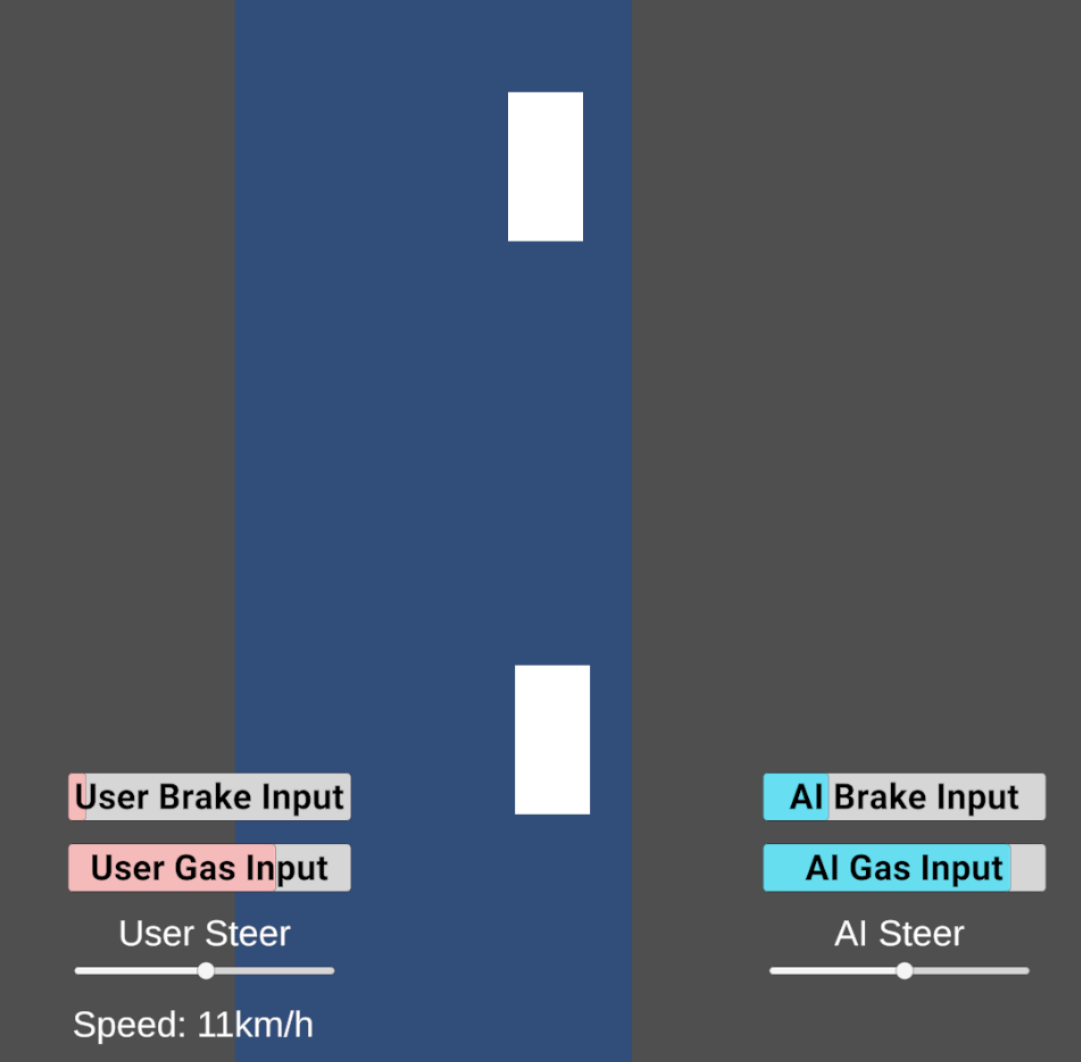

I quickly created my car (white rectangle 1), which had its own CarController & InputManager (collecting input from 3 separate sources).

The current iteration of my car has only 1 sensor: a big front-facing sensor that acts as a sonar sensor on steroids. It reads the distance from my car to the closest object it can detect (~5 m range).
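In Unity, a sensor like this is commonly built on a physics query such as a raycast or box cast; the project's actual implementation isn't reproduced here. As a minimal sketch of the logic only, here it is reduced to one dimension in Python, assuming every position is measured in metres along the road and each obstacle is represented by the position of its rear edge (both simplifications are assumptions for illustration).

```python
from typing import Iterable, Optional

SENSOR_RANGE_M = 5.0  # the ~5 m range mentioned above


def front_sensor(car_front_x: float,
                 obstacle_rear_xs: Iterable[float],
                 max_range: float = SENSOR_RANGE_M) -> Optional[float]:
    """Return the gap to the closest obstacle ahead, or None if nothing is in range.

    Simplified 1-D model (an assumption): positions are metres along the road,
    the car's front bumper is at car_front_x, and each obstacle is given by
    the x position of its rear edge.
    """
    gaps = (x - car_front_x for x in obstacle_rear_xs)
    # Keep only obstacles ahead of us (gap >= 0) and within the sensor's reach.
    in_range = [gap for gap in gaps if 0.0 <= gap <= max_range]
    return min(in_range) if in_range else None


print(front_sensor(10.0, [13.5, 30.0, 4.0]))  # 3.5: the obstacle at 13.5 m is closest in range
print(front_sensor(10.0, [16.0]))             # None: 6 m ahead is out of range
```

Returning `None` rather than a magic number keeps "nothing detected" distinct from "very close"; when writing to a CSV, that case still has to be encoded somehow (e.g. as the maximum range).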

This sensor data is then gathered alongside other data about the current frame. (Since this is virtual, we deal in frames: we currently process 60 frames every second, or 1 frame roughly every 16.7 ms, or, to put it in more common terms, 60 fps.)
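A quick sanity check of the frame timing, plus what it implies for data volume, assuming one logged row per frame (that logging rate is an assumption, not something stated above):

```python
FPS = 60

frame_interval_ms = 1000 / FPS              # 16.67 ms, often rounded to "16 ms"
rows_per_minute = FPS * 60                  # assuming one logged row per frame
lead_car_speed_ms = 10 / 3.6                # the 10 km/h scenario, in metres per second
metres_per_frame = lead_car_speed_ms / FPS  # how far that car moves between two frames

print(f"{frame_interval_ms:.2f} ms per frame")
print(f"{rows_per_minute} rows per minute of driving")
print(f"{metres_per_frame * 100:.1f} cm per frame at 10 km/h")
```

So a single minute of driving already produces 3,600 samples, while a slow car moves only about 4.6 cm between consecutive frames, which means neighbouring rows are nearly identical.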

(Also, the scene itself can be seen as one long lane, or a rural road with 2 unmarked lanes, between 2 immovable walls. This setup will be improved, in terms of both realism and visuals, later down the road (ba-dum-tss).)

There are also other cars on this long road (see white rectangle 2 above), which can currently only move at a set, constant speed.

Using this configuration, I was able to simulate a very basic scenario: the driver following a car that keeps a constant speed of 10 km/h.

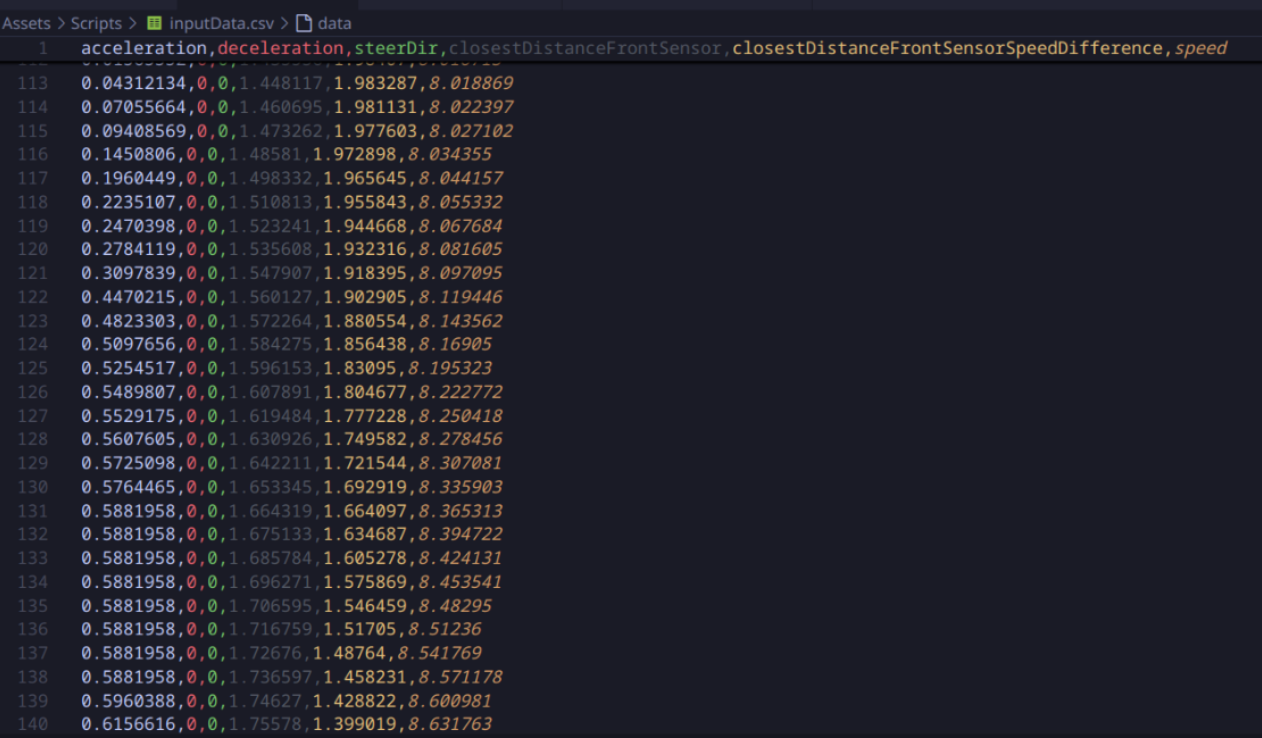

With this example scenario in place, I went on to create a data-collection script that gathers all kinds of data and writes it to a .csv file for later model training.

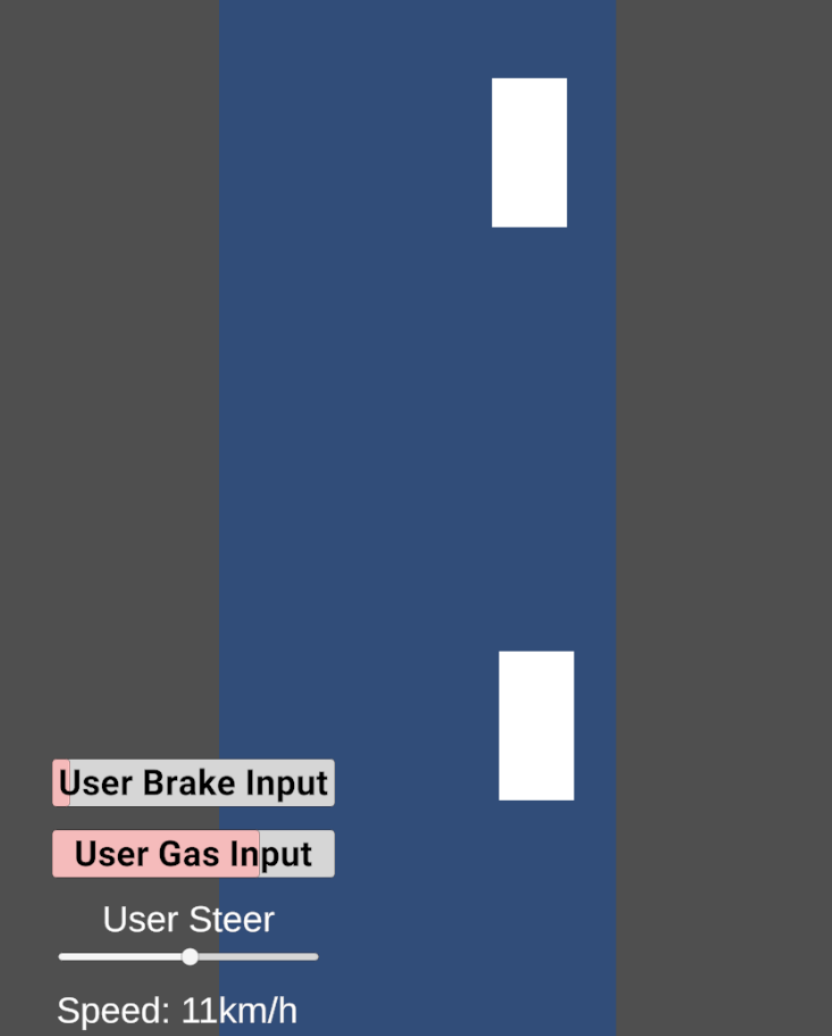
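The collector itself runs inside Unity. Purely to illustrate the idea of "one row per frame, with a header", here is a minimal Python sketch using the standard `csv` module. The column names and the `-1.0` "nothing in range" convention are hypothetical, chosen only for this example.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class FrameSample:
    """One simulated frame. Column names are hypothetical, for illustration only."""
    time_s: float
    speed_kmh: float
    front_distance_m: float  # assumed convention: -1.0 when nothing is in sensor range
    throttle: float          # driver input, 0..1
    brake: float             # driver input, 0..1
    steer: float             # driver input, -1 (left) .. 1 (right)


def write_samples(out, samples):
    """Write a header row, then one CSV row per frame, to any text file-like object."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(FrameSample)])
    writer.writeheader()
    for sample in samples:
        writer.writerow(asdict(sample))


# In-memory demo; for a real recording use open("recording.csv", "w", newline="").
buffer = io.StringIO()
write_samples(buffer, [
    FrameSample(0.000, 12.0, 4.8, 0.3, 0.0, 0.0),
    FrameSample(0.017, 12.1, 4.7, 0.3, 0.0, 0.0),
])
print(buffer.getvalue())
```

Deriving the header from the dataclass fields keeps the column order in one place, so the training script can rely on the same names.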

To make it easier to see what's happening while collecting data, I also created some very simple UI elements that simulate a car's dashboard.

AI stands for a lot of ifs

Since I have no prior experience with AI & Machine Learning, this aspect of the project was simultaneously the most interesting and most intimidating. Luckily, we already had a couple of lectures on the basics of Machine Learning and Neural Networks, where besides receiving some ground-level understanding of the concepts, we also received a couple of demos & examples on how to train our own models.

While scratching my head in front of my freshly installed copy of Jupyter Notebook, I remembered that the demos we were given (at least the Python ones) used the scikit-learn library, which contains all kinds of useful tools for training ML models.

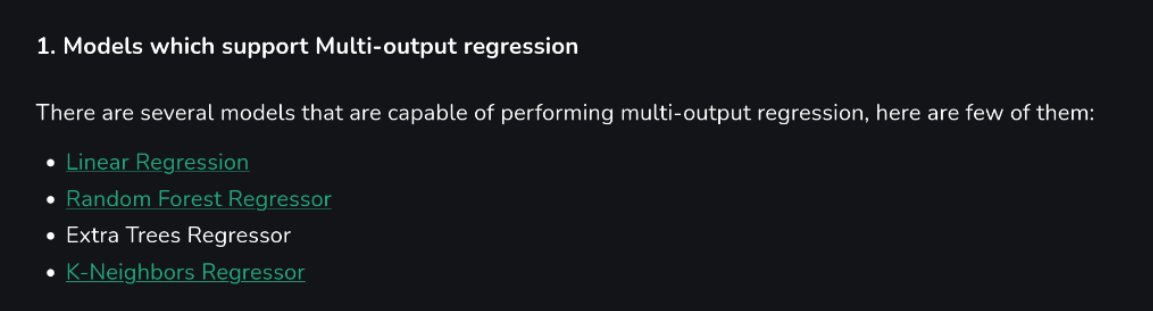

Since I plan on giving the AI model multiple input fields & multiple output fields, I knew I had to figure out which algorithms support that, so I turned to Google and found this article, which lists a couple of algorithms that do.

I chose to go with the Random Forest Regressor first, since its name sounded the coolest.

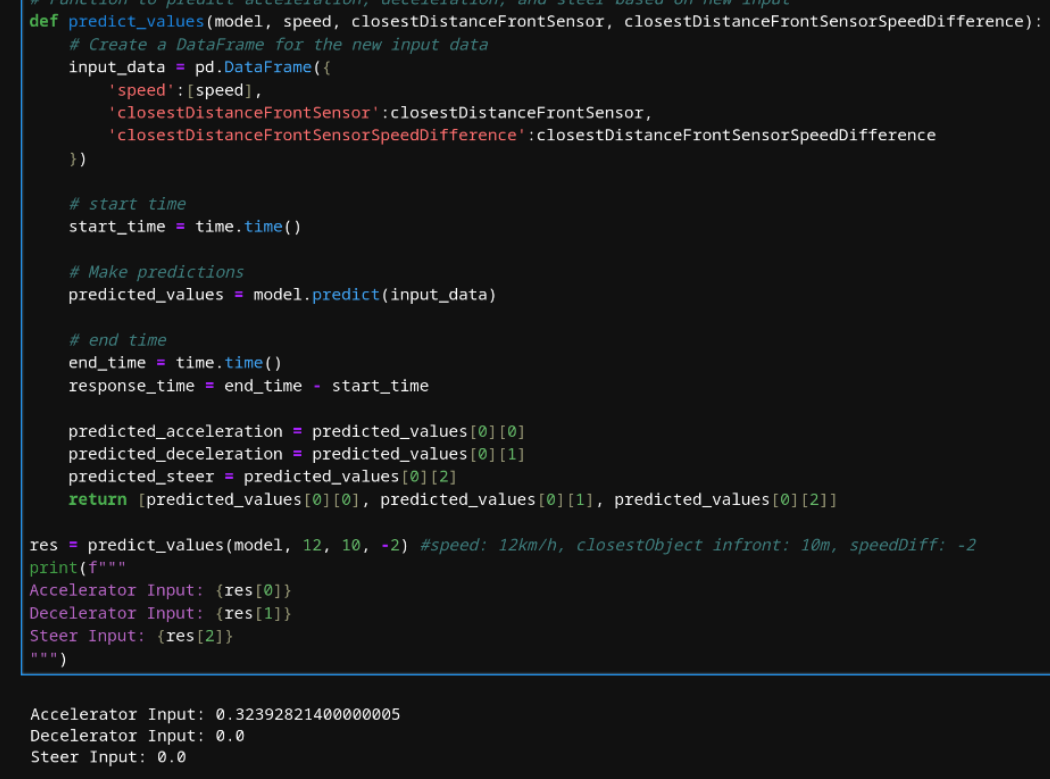
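In scikit-learn, `RandomForestRegressor` handles multiple outputs natively: passing a 2-D target array of shape `(n_samples, n_outputs)` to `fit` is enough, and `predict` then returns one column per output. A minimal sketch on synthetic toy data; the features, targets and the "brake when closer than 2 m" rule are all invented for illustration and are not the project's data.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)

# Synthetic stand-in data, NOT real simulator recordings.
# Inputs:  [speed_kmh, front_distance_m]
# Outputs: [throttle, brake], following a made-up rule: brake when closer than 2 m.
X = np.column_stack([rng.uniform(0, 50, 500), rng.uniform(0, 5, 500)])
too_close = X[:, 1] < 2.0
y = np.column_stack([np.where(too_close, 0.0, 0.5),
                     np.where(too_close, 0.8, 0.0)])

model = RandomForestRegressor(n_estimators=50, random_state=0)
model.fit(X, y)  # y has 2 columns, so this trains a single multi-output forest

pred = model.predict(np.array([[30.0, 1.0], [30.0, 4.5]]))
print(pred.shape)  # (2, 2): one row per query, one column per output
print(pred)        # first row should lean towards braking, second towards throttle
```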

After installing the library and consulting my dear friend ChatGPT on how to load a CSV into Python, I was very quickly able to write a simple script that trained a RandomForestRegressor model and got it to spit something out!

Now, I'd be lying if I said I 100% understand the inner workings of Machine Learning in general, let alone the RandomForestRegressor, but doing this exercise gave me some confidence that the project is doable.
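Loading a CSV for training mostly comes down to splitting the columns into model inputs (`X`) and expected outputs (`y`). A minimal standard-library sketch; the column names and values are hypothetical, and `pandas.read_csv` is a common alternative that does the same in one call.

```python
import csv
import io


def load_xy(csv_file, feature_cols, target_cols):
    """Split a CSV (with a header row) into feature rows X and target rows y."""
    X, y = [], []
    for row in csv.DictReader(csv_file):
        X.append([float(row[c]) for c in feature_cols])
        y.append([float(row[c]) for c in target_cols])
    return X, y


# Hypothetical file contents; in practice: open("recording.csv", newline="")
sample = io.StringIO(
    "speed_kmh,front_distance_m,throttle,brake\n"
    "42.0,4.8,0.4,0.0\n"
    "38.5,1.2,0.0,0.7\n"
)
X, y = load_xy(sample, ["speed_kmh", "front_distance_m"], ["throttle", "brake"])
print(X)  # [[42.0, 4.8], [38.5, 1.2]]
print(y)  # [[0.4, 0.0], [0.0, 0.7]]
```

Both lists can be passed directly to a scikit-learn estimator's `fit(X, y)`; with two target columns, the model learns both outputs at once.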

I mean, I already got it to spit out some numbers, so it wouldn't be too hard to make it spit out more numbers, and to make sure they make sense, right? RIGHT?!

Well… I guess that's a problem for future Milan, because having something working gave me quite the sugar high, and I immediately modified the script to start a WebSocket server once training finished, so that I could hook up my virtual InputManager to the AI model.
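One way to keep such a server simple is to separate the per-message logic from the WebSocket transport: the WebSocket library (for example the `websockets` package) only has to receive a text message, pass it to a handler like the one below, and send the returned string back. The JSON message shape and field names here are hypothetical, not the project's actual protocol, and `ConstantModel` is a stand-in so the handler can be tried without a trained model.

```python
import json


class ConstantModel:
    """Hypothetical stand-in for a trained regressor (anything with .predict works)."""

    def predict(self, rows):
        return [[0.0, 0.5] for _ in rows]  # always: no throttle, half brake


def handle_message(model, raw: str) -> str:
    """Turn one incoming JSON message into one JSON reply.

    Hypothetical message format:
      request: {"frame": 123, "features": [speed_kmh, front_distance_m]}
      reply:   {"frame": 123, "throttle": 0.1, "brake": 0.0}
    """
    msg = json.loads(raw)
    throttle, brake = model.predict([msg["features"]])[0]  # one row in, one row out
    return json.dumps({
        "frame": msg["frame"],         # echo the frame so the client can match replies
        "throttle": float(throttle),   # plain float: json can't encode every numpy scalar
        "brake": float(brake),
    })


print(handle_message(ConstantModel(), '{"frame": 7, "features": [30.0, 1.2]}'))
```

Because the handler is a plain function, it can be unit-tested without starting a server, and swapping in a real model only means passing a different object.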

Car Copilot™️

To have my simulated car communicate with the AI model, I only needed to implement a WebSocket client in my InputManager, which was quite easy to do.

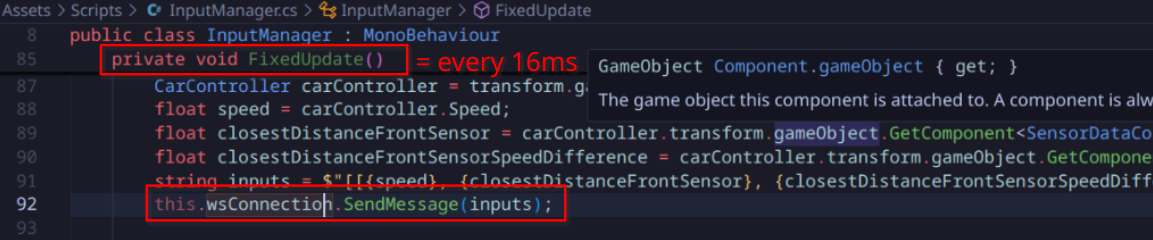

Now, to get constant feedback from the model, we have to continually hit it with new values. To do this, I simply send a message to the model every 16 ms:

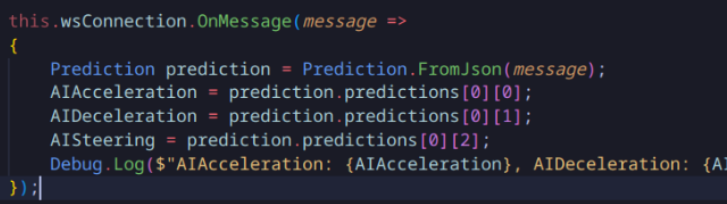
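The project's client lives in Unity, so the following is only a Python illustration of sending at a fixed rate without drift: each deadline is computed from the previous deadline rather than from "now", so small delays don't accumulate, and if a send runs long, missed ticks are skipped instead of firing a burst of stale messages. The injectable `clock`/`sleep` parameters and the `FakeClock` helper are assumptions added purely so the loop can be demonstrated without waiting in real time.

```python
import time


def run_fixed_rate(send, interval_s, duration_s, clock=time.monotonic, sleep=time.sleep):
    """Call send(i) every interval_s seconds for duration_s seconds; return the call count."""
    start = clock()
    next_tick = start
    sent = 0
    while next_tick - start < duration_s:
        now = clock()
        if next_tick > now:
            sleep(next_tick - now)  # wait for the scheduled tick
        send(sent)
        sent += 1
        next_tick += interval_s  # schedule from the last deadline, not from "now"
        # If we fell a whole interval (or more) behind, skip the missed ticks
        # rather than firing a burst of messages carrying stale data.
        behind = clock() - next_tick
        if behind >= interval_s:
            next_tick += (behind // interval_s) * interval_s
    return sent


class FakeClock:
    """Simulated time, so the demo runs instantly and deterministically."""

    def __init__(self):
        self.t = 0.0

    def now(self):
        return self.t

    def sleep(self, seconds):
        self.t += seconds


fake = FakeClock()
sent_at = []
n_ok = run_fixed_rate(lambda i: sent_at.append(fake.now()), 0.016, 0.2,
                      clock=fake.now, sleep=fake.sleep)

slow = FakeClock()  # here every send takes 50 ms, longer than the 16 ms interval
n_slow = run_fixed_rate(lambda i: slow.sleep(0.05), 0.016, 0.2,
                        clock=slow.now, sleep=slow.sleep)
print(n_ok, n_slow)
```

A fixed rate only pays off if the model answers within the interval; if replies consistently take longer than 16 ms, a lower send rate is probably preferable to a growing backlog.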

I then handle the responses as they come in:

But since I don't expect the model to be anywhere close to useful at this stage, instead of hooking it up to the CarController right away, I decided to only add its signals to the UI, so that it "says" what it wants to do at any given moment:

Having completed this setup, I was finally able to drive the car in any given scenario and also get remarks from the AI model on what it would do, were it driving instead of me (backseat-quarterback style).
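This "display, don't drive" setup can be sketched as a small class: every reply updates the latest suggestion (which the dashboard can show), while the controls actually applied to the car stay the driver's unless a switch is turned on. Everything here is hypothetical (the class names, JSON fields and the switch). It also assumes each reply echoes the frame number of its request, so an older reply can never overwrite a newer one; over a single WebSocket connection, replies normally arrive in order anyway, so that check is a cheap safeguard rather than a necessity.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Controls:
    throttle: float  # 0..1
    brake: float     # 0..1


class CopilotChannel:
    """Keeps the newest AI suggestion and decides whether it may drive the car."""

    def __init__(self, ai_in_control: bool = False):
        self.ai_in_control = ai_in_control      # off = advisory only (shown, not applied)
        self.latest: Optional[Controls] = None  # newest suggestion, for the dashboard UI
        self.latest_frame = -1

    def on_reply(self, raw: str) -> None:
        """Handle one reply as it arrives; replies older than the newest are dropped."""
        msg = json.loads(raw)
        if msg["frame"] > self.latest_frame:
            self.latest_frame = msg["frame"]
            self.latest = Controls(msg["throttle"], msg["brake"])

    def controls_for_car(self, driver: Controls) -> Controls:
        """What actually reaches the car: the driver's input unless the AI switch is on."""
        if self.ai_in_control and self.latest is not None:
            return self.latest
        return driver


channel = CopilotChannel()  # advisory mode
channel.on_reply('{"frame": 2, "throttle": 0.0, "brake": 0.9}')
channel.on_reply('{"frame": 1, "throttle": 0.6, "brake": 0.0}')  # stale: ignored
driver = Controls(throttle=0.5, brake=0.0)
print("AI suggests:", channel.latest)                     # shown on the dashboard
print("Car receives:", channel.controls_for_car(driver))  # still the driver's input
```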

Conclusion

All in all, this past month was pretty successful, as I believe I managed to dip my toes into the ✨endless pool of mysteries✨ that is machine learning, albeit at a very surface level.

For the next month, I plan on extending the virtual environment by:

- adding some eye candy, as I've noticed that people are always initially confused when I try showing them something

- adding actual lanes, so the system has some notion of where it's positioned on the road

- creating different isolated scenarios, so that I can collect data for (and test) the model more effectively

- adding support for multiple model inputs, so that I can train models with different algorithms and compare them side by side

- (maybe) adding a switch that allows the AI to take control of the car
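Comparing algorithms side by side could start as simply as training several scikit-learn regressors on the same split and ranking them by held-out error. A minimal sketch on synthetic toy data (invented for illustration, not simulator recordings); the three candidates are just examples of estimators that accept multi-output targets natively, not necessarily the ones from the article mentioned earlier.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

rng = np.random.default_rng(1)

# Synthetic toy data: [speed_kmh, front_distance_m] -> [throttle, brake]
X = np.column_stack([rng.uniform(0, 50, 600), rng.uniform(0, 5, 600)])
too_close = X[:, 1] < 2.0
y = np.column_stack([np.where(too_close, 0.0, 0.5),
                     np.where(too_close, 0.8, 0.0)])

# Hold out a quarter of the rows so every model is scored on data it never saw.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

candidates = {
    "random_forest": RandomForestRegressor(n_estimators=50, random_state=0),
    "k_neighbors": KNeighborsRegressor(n_neighbors=5),
    "linear": LinearRegression(),
}
scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)  # all three accept a 2-D y (multi-output)
    scores[name] = mean_absolute_error(y_test, model.predict(X_test))  # averaged over outputs

for name, mae in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name:>14}: MAE {mae:.3f}")
```

One caveat: k-nearest neighbours is sensitive to feature scale (speed spans 0 to 50 here, distance only 0 to 5), so in a fair comparison it would usually be wrapped in a pipeline with `StandardScaler`.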

I'd also like to learn more in depth about the different ML algorithms and (maybe for next-next time) look into Reinforcement Learning.

As for the more societal issues, I plan on speaking to a Fontys psychology consultant to gather insights on how best to integrate the AI functionality, so that it:

- Actually does something

- But at the same time doesn't contribute to a dreadful experience

My dearest of fans, thank you for your time!